Charbel-Raphael Segerie

An introduction to AI Safety

Artificial intelligence is advancing rapidly. While these technologies are awe-inspiring, models like ChatGPT or Bing Chat, although specifically developed to be polite and benevolent towards the user, can be easily manipulated.

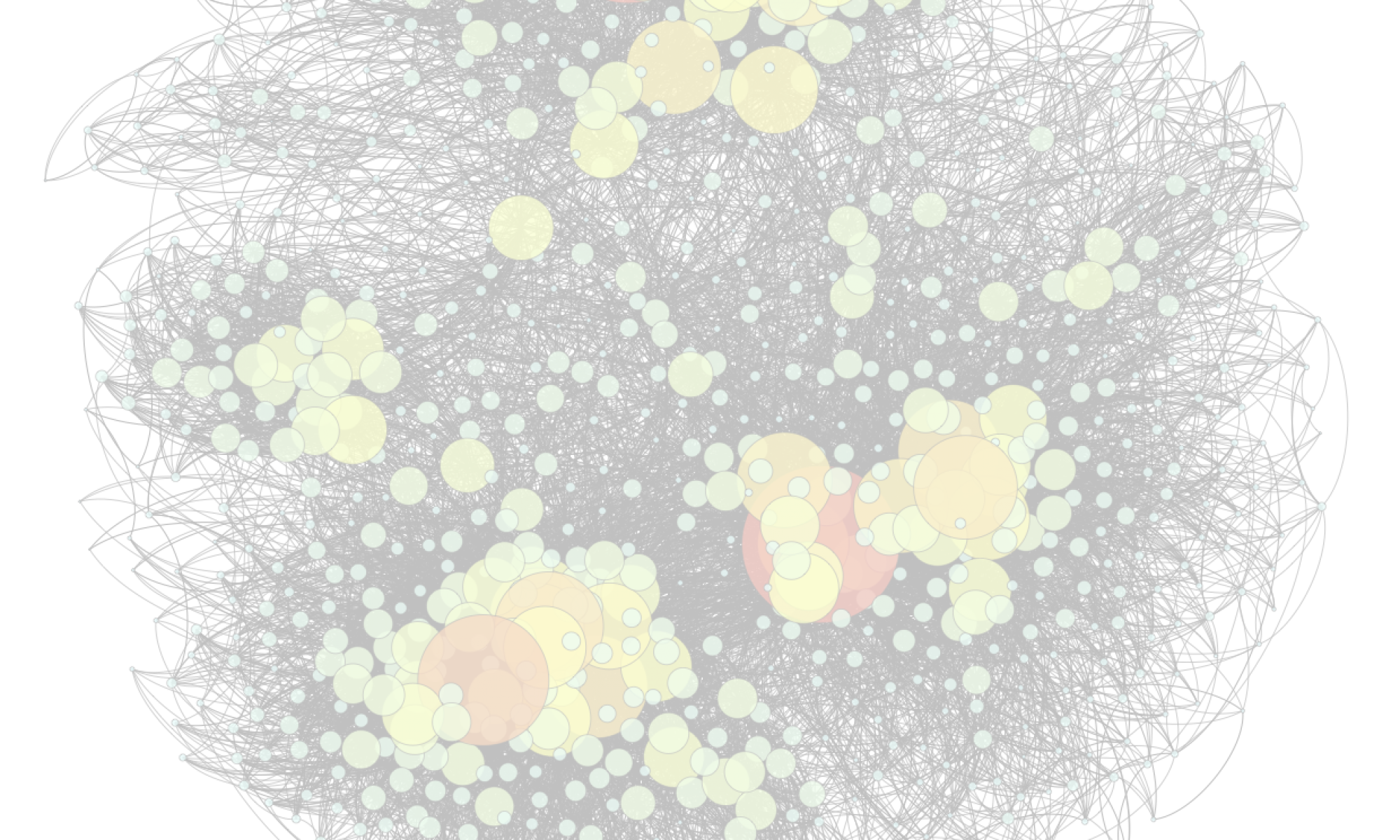

In this presentation, we will address three major technical flaws. First, these models remain large black boxes, and we cannot guarantee that their actions will conform to our expectations. The second flaw is the lack of robustness: the models are trained on a particular dataset and must therefore generalize to new situations during deployment. The fact that Bing Chat threatens users when it was trained to help them illustrates this failure of generalization. The third flaw lies in the difficulty of precisely specifying the desired objective to a model, given the complexity and diversity of human values.

Then, we will address different solution paradigms: specification techniques with reinforcement learning (RLHF and its variations), interpretability (how information is represented in neural networks, robustly editing a language model’s knowledge by modifying its memory, …), and scalable oversight (training and alignment techniques that are likely to work even with human-level AIs).