Yael Amsterdamer & Daniel Deutch

Query-Guided Data Cleaning (Yael Amsterdamer)

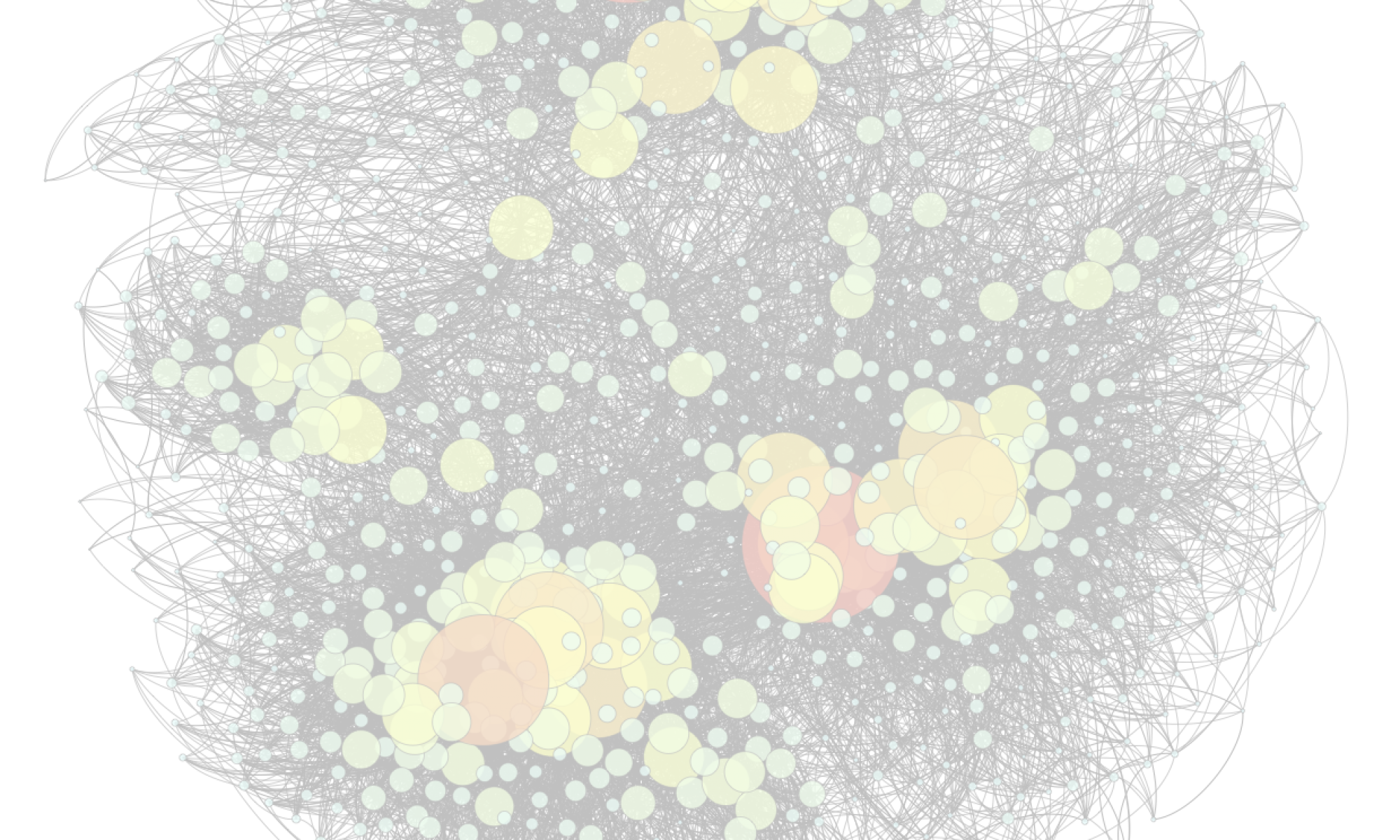

We take an active approach to the cleaning of uncertain databases, by proposing a set of tools to guide the cleaning process. We start with a database whose tuple correctness is uncertain, and with some means of resolving this uncertainty, e.g., crowdsourcing, experts, a trained ML model or external sources. Guided by a query that defines what part of the data is of importance, our goal is to select tuples whose cleaning would effectively resolve uncertainty in query results. In other words, we develop a query-guided process for the resolution of uncertain data. Our approach combines techniques from different fields, including the use of provenance information to capture the propagation of errors to query results and Boolean interactive evaluation to decide which input tuples to clean based on their role in output derivation or effect on uncertainty.
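The idea of using provenance to decide which tuples to clean can be illustrated with a small sketch. It assumes that the provenance of a single query result is given as a Boolean formula in DNF over tuple identifiers, and that an oracle (standing in for a crowd worker, expert, or model) reports whether a tuple is correct. The names `evaluate`, `next_tuple`, and `resolve`, and the greedy "most frequent tuple first" heuristic, are illustrative assumptions and not necessarily the selection strategy presented in the talk.

```python
from typing import Callable, Dict, FrozenSet, List, Optional, Tuple

Monomial = FrozenSet[str]   # one derivation: all of its tuples must be correct
DNF = List[Monomial]        # the result holds if any derivation holds


def evaluate(dnf: DNF, known: Dict[str, bool]) -> Optional[bool]:
    """Return True/False if the result is already decided, else None."""
    undecided = False
    for mono in dnf:
        if any(known.get(t) is False for t in mono):
            continue                      # this derivation is refuted
        if all(known.get(t) is True for t in mono):
            return True                   # one verified derivation suffices
        undecided = True
    return None if undecided else False


def next_tuple(dnf: DNF, known: Dict[str, bool]) -> Optional[str]:
    """Greedy heuristic (an assumption): pick the unverified tuple that
    occurs in the largest number of derivations that are still alive."""
    counts: Dict[str, int] = {}
    for mono in dnf:
        if any(known.get(t) is False for t in mono):
            continue
        for t in mono:
            if t not in known:
                counts[t] = counts.get(t, 0) + 1
    if not counts:
        return None
    return max(sorted(counts), key=counts.get)  # ties broken alphabetically


def resolve(dnf: DNF, oracle: Callable[[str], bool]) -> Tuple[bool, Dict[str, bool]]:
    """Probe tuples one at a time until the query result is certain."""
    known: Dict[str, bool] = {}
    while evaluate(dnf, known) is None:
        t = next_tuple(dnf, known)
        known[t] = oracle(t)
    return bool(evaluate(dnf, known)), known


if __name__ == "__main__":
    # Hypothetical provenance: the result is derived via (r1 AND s1) OR (r1 AND s2).
    provenance = [frozenset({"r1", "s1"}), frozenset({"r1", "s2"})]
    ground_truth = {"r1": False, "s1": True, "s2": True}
    result, probed = resolve(provenance, ground_truth.__getitem__)
    # r1 appears in both derivations, so it is probed first; since it is
    # incorrect, the result is refuted without checking s1 or s2.
    print(result, probed)
```

In this toy case a single probe settles the result, whereas cleaning tuples in an arbitrary order could take up to three.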

Yael Amsterdamer is a Professor at the Department of Computer Science, Bar-Ilan University, and the head of the Data Management Lab. She received her Ph.D. in Computer Science from Tel-Aviv University, and has been a visiting scholar at the University of Pennsylvania, Philadelphia, PA, and jointly at Télécom Paris and INRIA (Paris, France). Her research is in the field of interactive data management, spanning topics such as crowd-powered data management, interactive summarization, and data cleaning. Her research has been awarded multiple competitive grants, including the Israeli Science Foundation (ISF) personal grants, the Israeli Ministry of Science (MOST) grant, and the BIU Center for Research in Applied Cryptography and Cyber Security Personal Grant.

Explanations in Data Science (Daniel Deutch)

Data science involves complex processing over large-scale data for decision support, and much of this processing is done by black boxes such as data cleaning modules, database management systems, and machine learning modules. Decision support should be transparent, but the combination of complex computation and large-scale data makes transparency hard to achieve. Interpretability has been extensively studied in both the data management and the machine learning communities, but the problem is far from solved. I will present a holistic approach to the problem that is based on two facets, namely counterfactual explanations and attribution-based explanations. I will demonstrate the conceptual and computational challenges, as well as some of the main results we have achieved in this context.
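To make the two facets concrete, here is a minimal sketch on a toy Boolean query. It assumes the query result is described by a DNF formula over input tuple identifiers. A tuple is treated as a counterfactual explanation if deleting it alone flips the result, and attribution is illustrated with the Shapley value, one common attribution scheme; the abstract does not say which scheme the talk uses. All function names are hypothetical, and the exact Shapley computation enumerates every ordering, so it is only feasible for a handful of tuples.

```python
from fractions import Fraction
from itertools import permutations
from typing import Dict, FrozenSet, List, Set

DNF = List[FrozenSet[str]]  # result holds if all tuples of some derivation are present


def holds(dnf: DNF, present: Set[str]) -> bool:
    """Does the query result hold when only `present` tuples are in the database?"""
    return any(mono <= present for mono in dnf)


def counterfactuals(dnf: DNF, database: Set[str]) -> List[str]:
    """Tuples whose individual removal flips the result on the full database."""
    base = holds(dnf, database)
    return sorted(t for t in database if holds(dnf, database - {t}) != base)


def shapley(dnf: DNF, database: Set[str]) -> Dict[str, Fraction]:
    """Exact Shapley value of each tuple: its average marginal contribution
    to the result over all insertion orders of the tuples."""
    players = sorted(database)
    totals = {t: Fraction(0) for t in players}
    orders = list(permutations(players))
    for order in orders:
        present: Set[str] = set()
        for t in order:
            before = holds(dnf, present)
            present.add(t)
            totals[t] += int(holds(dnf, present)) - int(before)
    return {t: total / len(orders) for t, total in totals.items()}


if __name__ == "__main__":
    # Hypothetical provenance: (a AND b) OR (a AND c).
    provenance = [frozenset({"a", "b"}), frozenset({"a", "c"})]
    db = {"a", "b", "c"}
    print(counterfactuals(provenance, db))
    print(shapley(provenance, db))
```

Here only `a` is a counterfactual explanation, since `b` and `c` can substitute for each other, while the Shapley values still assign `b` and `c` a smaller, nonzero share of the responsibility. The sketch shows how the two kinds of explanation can complement each other.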

Daniel Deutch is a Full Professor in the Computer Science Department of Tel Aviv University. Daniel received his Ph.D. in Computer Science from Tel Aviv University. He was a postdoctoral fellow at the University of Pennsylvania and INRIA France. His research focuses on advanced database applications and web data management, studying both theoretical and practical aspects of issues such as data provenance, analysis of web applications and data, and dealing with data uncertainty. Daniel’s research has been published in the top conferences and journals on data and web data management (VLDB, SIGMOD/PODS, VLDBJ, TODS, etc.). He has won a number of research awards, including the VLDB best paper award, the Krill Prize (awarded by the Wolf Foundation), and the Yahoo! Early Career Award. His research has been awarded multiple competitive grants, including the European Research Council (ERC) Personal Research Grant and grants by the Israeli Science Foundation (ISF, twice), the US-Israel Binational Science Foundation (BSF), the Broadcom Foundation, the Israeli Ministry of Science (MOST), the Blavatnik Interdisciplinary Cyber Research Institute (ICRC), Intuit, and Intel.