Boshko Koloski (Jožef Stefan Institute)

Neuro-symbolic representation learning

Data, Intelligence & Graphs Team

Télécom Paris, Institut Polytechnique de Paris, France

Senja Pollak & Boshko Koloski (Jožef Stefan Institute)

Computational analysis of news: methods and applications in keyword extraction, fake-news classification, sentiment and migration discourse analysis

With the growing volume and influence of digital news media, computational methods for analysing news content have become essential. This talk addresses interconnected challenges spanning keyword extraction, misinformation detection, sentiment classification, and the study of migration discourse. For keyword extraction, we propose SEKE, a mixture-of-experts architecture that achieves state-of-the-art performance while offering interpretability through expert specialisation in distinct linguistic components. To combat misinformation, we demonstrate that ensembling heterogeneous representations from bag-of-words to knowledge graph-enriched neural embeddings substantially improves fake news classification. Extending beyond English, we develop zero-shot cross-lingual methods for both offensive language detection and news sentiment analysis, introducing novel training strategies that significantly outperform prior approaches for less-resourced languages. We apply these computational tools to the socially critical domain of migration discourse, analysing dehumanisation patterns and news framing in Slovene media coverage of Syrian and Ukrainian migrants — uncovering that while discourse has grown more negative over time, it is notably less dehumanising toward Ukrainian migrants. These contributions advance NLP methodology for news analysis while demonstrating its power to illuminate media narratives around pressing societal issues.

Yanzhu Guo

Automatic Evaluation of Human-Written and Machine-Generated Text

With the rapid expansion of digital content, automatic evaluation of textual information has become increasingly crucial. My research addresses the challenge of evaluating and enhancing the quality of both human-written and machine-generated texts. Beginning with human-written texts, we develop an argument extraction system to evaluate the substantiation of scientific peer reviews, providing insights into the quality of peer review in NLP conferences in recent years. Additionally, we assess the factuality of abstractive summarization datasets and propose a data refinement approach that enhances model performance while reducing computational demands. For machine-generated texts, we focus on the underexplored aspects of diversity and naturalness. We introduce a suite of metrics for measuring linguistic diversity and conduct a systematic evaluation across state-of-the-art LLMs, exploring how development and deployment choices influence their output diversity. We also investigate the impact of training LLMs on synthetic data produced by earlier models, demonstrating that recursive training loops lead to diminishing diversity. Finally, we explore the naturalness of multilingual LLMs, uncovering an English-centric bias and proposing an alignment method to mitigate it. These contributions advance evaluation methodologies for natural language generation and provide insights into the interaction between evaluation metrics, dataset quality and language model performance.

Simon Coumes

Contextual knowledge representation for neurosymbolic Artificial Intelligence reasoning

The field of Knowledge Representation and Reasoning is concerned with the representation of information about reality in a form that is both human-readable and machine-processable. It has been a part of artificial intelligence since its inception, and has produced many important formalisms and systems. One key aspect of knowledge is the context in which it is expressed. This was identified early on in the field and matches our common experience: understanding a statement or judging its validity often requires knowing the context in which it was meant. Historically, some work has aimed at producing logics that implement a general notion of context. None of them saw wide adoption, in part because they either lacked sufficient expressive power or were not sufficiently usable.

This dissertation presents Qiana, a logic of context powerful enough for almost all types of context representation. It is also compatible with various automated reasoning tools, as demonstrated by the provided code, which allows automated reasoning with Qiana. The code makes use of the pre-existing theorem prover Vampire, though any other compatible prover can be used instead. By providing a powerful logic for context representation with the possibility of concrete computations without (much) overhead, Qiana paves the way for wider adoption of logics of context, making it possible to build other reasoning on top of such logics, as has been done, for example, for epistemic logics or description logics.

Le-Minh Nguyen (Japan Advanced Institute of Science and Technology)

SPECTRA: Faster Large Language Model Inference with Optimized Internal and External Speculation

Inference with modern Large Language Models (LLMs) is both computationally intensive and time-consuming. While speculative decoding has emerged as a promising solution, existing approaches face key limitations. Training-based methods require the development of a draft model, which is often difficult to obtain and lacks generalizability. On the other hand, training-free methods provide only modest speedup improvements.

In this work, we introduce SPECTRA — a novel framework designed to accelerate LLM inference without requiring any additional training or modifications to the original LLM. SPECTRA incorporates two new techniques that efficiently leverage both internal and external speculation, each independently outperforming corresponding state-of-the-art (SOTA) methods. When combined, these techniques deliver up to a 4.08× speedup across a variety of benchmarks and LLM architectures, significantly surpassing existing training-free approaches. The implementation of SPECTRA is publicly available.
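As background for the abstract above, the general draft-and-verify idea behind speculative decoding can be sketched as follows. This is a toy illustration with greedy verification, not SPECTRA's method: `toy_target`, `toy_draft`, and the parameter `k` are hypothetical stand-ins, and a real system would verify all proposed tokens in one batched forward pass rather than in a loop.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]


def greedy_decode(target: NextToken, prompt: List[Token], n_new: int) -> List[Token]:
    """Baseline: one target call per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(target(out))
    return out


def speculative_decode(
    target: NextToken, draft: NextToken, prompt: List[Token], n_new: int, k: int = 4
) -> Tuple[List[Token], int]:
    """Draft up to k tokens cheaply, keep the prefix the target agrees with."""
    out = list(prompt)
    goal = len(prompt) + n_new
    rounds = 0  # number of verification rounds
    while len(out) < goal:
        # 1) The cheap draft proposes a short continuation.
        proposal: List[Token] = []
        ctx = list(out)
        for _ in range(min(k, goal - len(out))):
            tok = draft(ctx)
            proposal.append(tok)
            ctx.append(tok)
        # 2) The target checks each proposed token; on the first mismatch
        #    the target's own token is used and the rest is discarded.
        rounds += 1
        accepted: List[Token] = []
        for tok in proposal:
            expected = target(out + accepted)
            accepted.append(expected)
            if tok != expected:
                break
        out.extend(accepted)
    return out, rounds


# Hypothetical toy "models" over integer tokens (assumptions for illustration).
def toy_target(ctx: List[Token]) -> Token:
    return (ctx[-1] * 3 + 1) % 7


def toy_draft(ctx: List[Token]) -> Token:
    # Agrees with the target except after token 5.
    return 0 if ctx[-1] == 5 else toy_target(ctx)


if __name__ == "__main__":
    tokens, rounds = speculative_decode(toy_target, toy_draft, [1], n_new=12, k=4)
    print(tokens, rounds)
```

With greedy verification the output is identical to plain greedy decoding of the target; any speedup comes from needing fewer verification rounds than generated tokens when the draft is often right.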

Biography: Le-Minh Nguyen is currently a Professor in the School of Information Science and the director of the Interpretable AI Center at JAIST. He leads the Machine Learning and Natural Language Understanding Laboratory at JAIST. He is currently on sabbatical at Imperial College London, UK (until April 2026). His research interests include machine learning and deep learning, natural language processing, legal text processing, and explainable AI. He serves as an action editor of TACL (a leading journal in NLP), a board member of VLSP (Vietnamese Language and Speech Processing), and an editorial board member of AI & Law and Journal of Natural Language Processing (Cambridge). He is a steering committee member of Juris-informatics (Jurisin) in Japan, a research area that studies legal issues from the perspective of informatics.

François Amat

Mining Expressive Cross-Table Dependencies in Relational Databases

This thesis addresses the gap between what relational database schemas declare and the richer set of cross-table rules that actually govern real-world data. It introduces MATILDA, the first deterministic system capable of mining expressive first-order tuple-generating dependencies (FO-TGDs) with multi-atom heads, existential witnesses, and recursion directly from arbitrary relational databases, using principled, database-native definitions of support and confidence. MATILDA uncovers hidden business rules, workflow constraints, and multi-relation regularities that schemas alone cannot capture, while ensuring reproducible results through canonicalized search and tractable pruning guided by a constraint graph. To understand when simpler formalisms suffice, the thesis also presents MAHILDA, a relational Horn-rule baseline equipped with disjoint semantics to prevent self-justifying recursion. Overall, the work shows that expressive rule mining on realistic databases is both feasible and insightful, enabling more systematic, explainable, and schema-grounded analyses of complex relational data.
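To make the notions of support and confidence for a cross-table rule concrete, here is a minimal sketch for a single rule with an existential head, `Order(o, c) -> exists p. Payment(p, o)`. The tables, the rule, and the counting scheme (association-rule style) are assumptions chosen for illustration; they are not MATILDA's definitions, which the abstract does not spell out.

```python
from typing import List, Tuple

Order = Tuple[int, str]    # (order_id, customer), hypothetical schema
Payment = Tuple[int, int]  # (payment_id, order_id), hypothetical schema


def rule_support_confidence(
    orders: List[Order], payments: List[Payment]
) -> Tuple[int, float]:
    """Score the rule Order(o, c) -> exists p. Payment(p, o).

    support    = number of body matches (orders) with at least one witness p
    confidence = support / number of body matches
    """
    paid_order_ids = {order_id for _, order_id in payments}
    body_matches = [order_id for order_id, _ in orders]
    support = sum(1 for order_id in body_matches if order_id in paid_order_ids)
    confidence = support / len(body_matches) if body_matches else 0.0
    return support, confidence


if __name__ == "__main__":
    orders = [(1, "a"), (2, "b"), (3, "a"), (4, "c")]
    payments = [(10, 1), (11, 3), (12, 3)]  # order 3 has two witnesses
    print(rule_support_confidence(orders, payments))
```

Counting each body match once, regardless of how many witnesses it has, keeps an order with several payments from inflating the score.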

Cristian Santini (University of Macerata)

Entity Linking and Relation Extraction for Historical Italian Texts: Challenges and Potential Solutions

Entity Linking and Relation Extraction enable the automatic identification of named entities mentioned in texts, along with their relationships, by connecting them to external knowledge graphs such as Wikidata. While these techniques work well on modern documents, applying them to historical texts presents significant challenges due to the diachronic evolution of language and limited resources for training computational models. This seminar presents recent work on developing methods and datasets for processing historical Italian texts. It will discuss the creation of a new benchmark dataset extracted from digital scholarly editions covering two centuries of Italian literary and political writing. The talk will then present approaches that enhance entity disambiguation by incorporating temporal and contextual information from external Wikidata. Finally, it will detail a method for automatically constructing knowledge graphs from historical correspondence that combines multiple language models in sequence, demonstrating how these technologies can facilitate the exploration and understanding of historical archives without requiring extensive manual annotation or model training.

Yiwen Peng

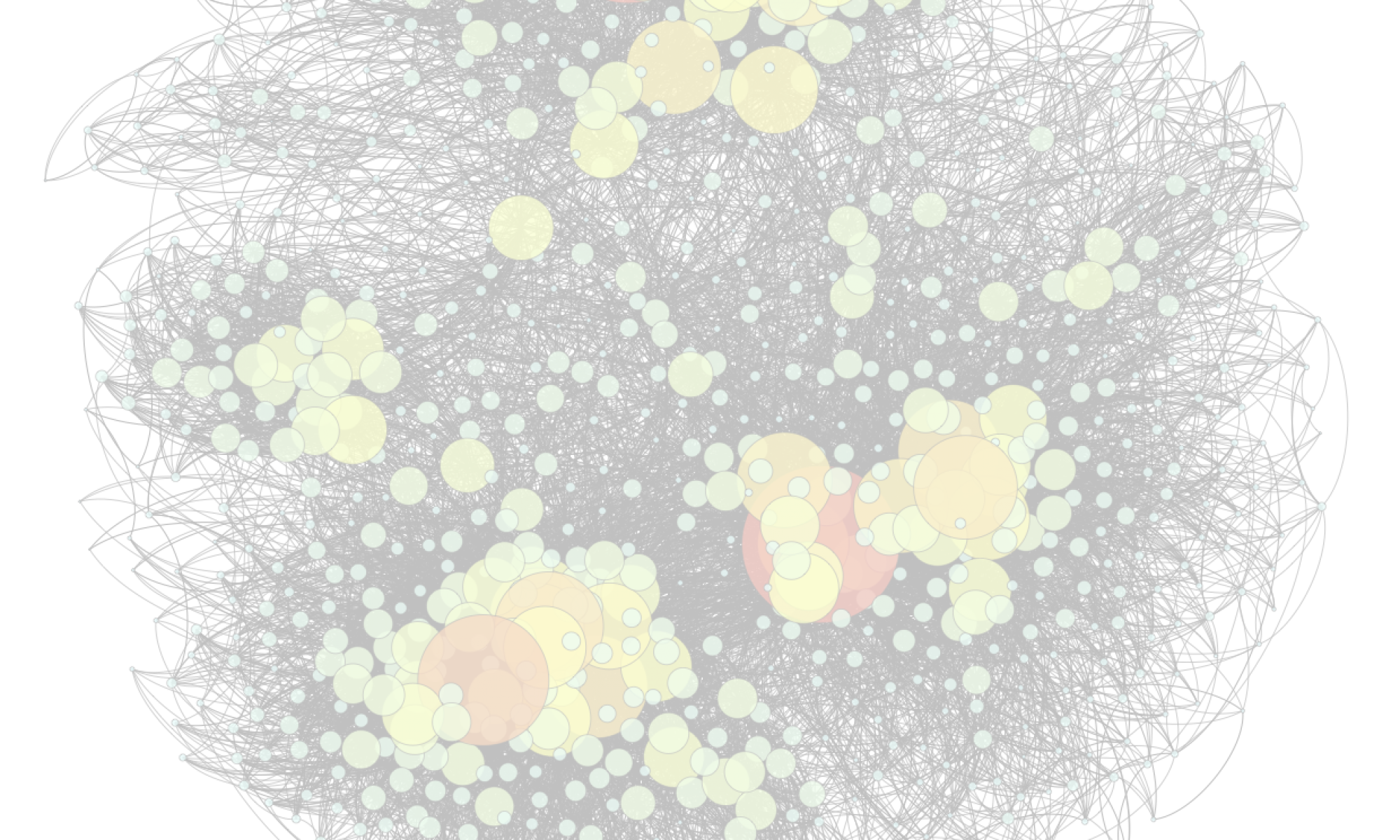

FLORA: Unsupervised Knowledge Graph Alignment by Fuzzy Logic

Knowledge graph alignment is the task of matching equivalent entities (that is, instances and classes) and relations across two knowledge graphs. Most existing methods focus on pure entity-level alignment, computing the similarity of entities in some embedding space. They lack interpretable reasoning and need training data to work. To solve these issues, we introduce FLORA, a simple yet effective method that (1) is unsupervised, i.e., does not require training data, (2) provides a holistic alignment for entities and relations iteratively, (3) is based on fuzzy logic and thus delivers interpretable results, (4) provably converges, (5) allows dangling entities, i.e., entities without a counterpart in the other KG, and (6) achieves state-of-the-art results on major benchmarks.

Fabian Suchanek

Fabian spent the month of June 2025 at the Sapienza in Rome, and will tell us about his experience.

Adrien Coulet

Data- and knowledge-driven approaches for step-by-step guidance to differential diagnosis

Diagnosis guidelines provide recommendations based on expert consensus that cover the majority of the population, but often overlook patients with uncommon conditions or multiple morbidities. We will present and compare two alternative approaches that provide step-by-step guidance for the differential diagnosis of anemia and lupus. The first approach relies on reinforcement learning and observational data. The second relies on large language models and domain knowledge.

Zacchary Sadeddine

Meaning Representations and Reasoning in the Age of Large Language Models

This thesis explores how to make large language models (LLMs) more reliable and transparent in their reasoning. It first examines around fifteen societal issues related to these models, such as disinformation or user overreliance, and then investigates symbolic structures from linguistics and how they can be used to improve the performance and transparency of LLMs. It presents VANESSA, a neuro-symbolic reasoning system that combines the power of neural models with the rigor of symbolic reasoning, achieving performance comparable to LLMs while remaining transparent. Finally, it addresses the problem of verifying LLM outputs by introducing a step-by-step verification benchmark, paving the way for more interpretable, controllable and trustworthy artificial intelligence systems.