Yanzhu Guo

Automatic Evaluation of Human-Written and Machine-Generated Text

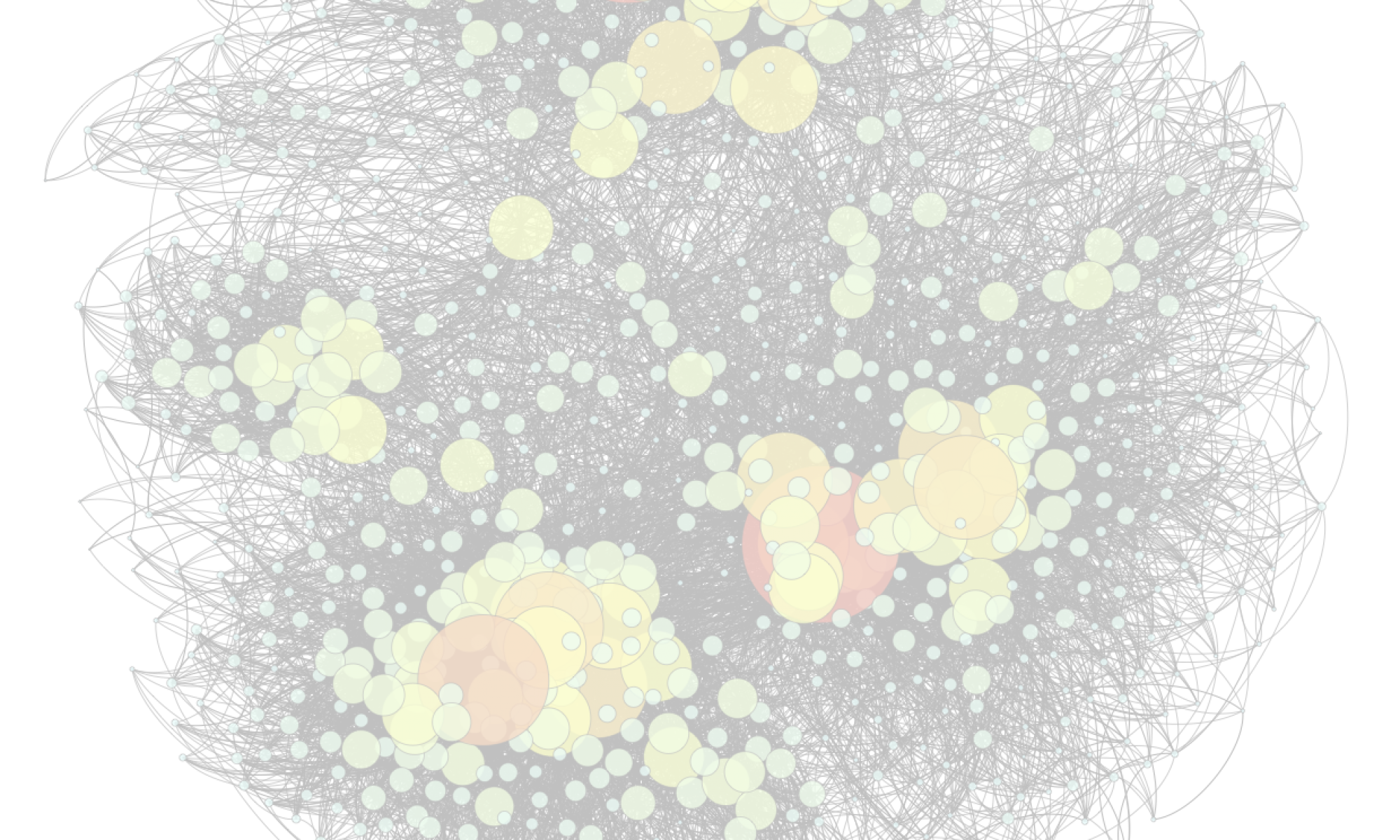

With the rapid expansion of digital content, automatic evaluation of textual information has become increasingly crucial. This research addresses the challenge of evaluating and enhancing the quality of both human-written and machine-generated texts. Beginning with human-written texts, we develop an argument extraction system to evaluate the substantiation of scientific peer reviews, shedding light on the quality of peer reviewing at recent NLP conferences. Additionally, we assess the factuality of abstractive summarization datasets and propose a data refinement approach that enhances model performance while reducing computational demands. For machine-generated texts, we focus on the underexplored aspects of diversity and naturalness. We introduce a suite of metrics for measuring linguistic diversity and conduct a systematic evaluation across state-of-the-art LLMs, exploring how development and deployment choices influence their output diversity. We also investigate the impact of training LLMs on synthetic data produced by earlier models, demonstrating that recursive training loops lead to diminishing diversity. Finally, we explore the naturalness of multilingual LLMs, uncovering an English-centric bias and proposing an alignment method to mitigate it. These contributions advance evaluation methodologies for natural language generation and provide insights into the interaction between evaluation metrics, dataset quality and language model performance.