Gabriel Damay

Dynamic Decision Trees and Community-based Graph Embeddings: towards Interpretable Machine Learning

Machine learning is the field of computer science that interests in building models and solutions from data without knowing exactly the set of instructions internal to these models and solutions. This field has achieved great results but is now under scrutiny for the inability to understand or audit its models among other concerns. Interpretable Machine Learning addresses these concerns by building models that are inherently interpretable. This thesis contributes to Interpretable Machine Learning in two ways.

First, we study decision trees. This is a very popular group of machine learning methods for classification problems and it is interpretable by design. However, real world data is often dynamic, but few algorithms can maintain a decision tree when data can be both inserted and deleted from the training set. We propose a new algorithm called FuDyADT to solve this problem.

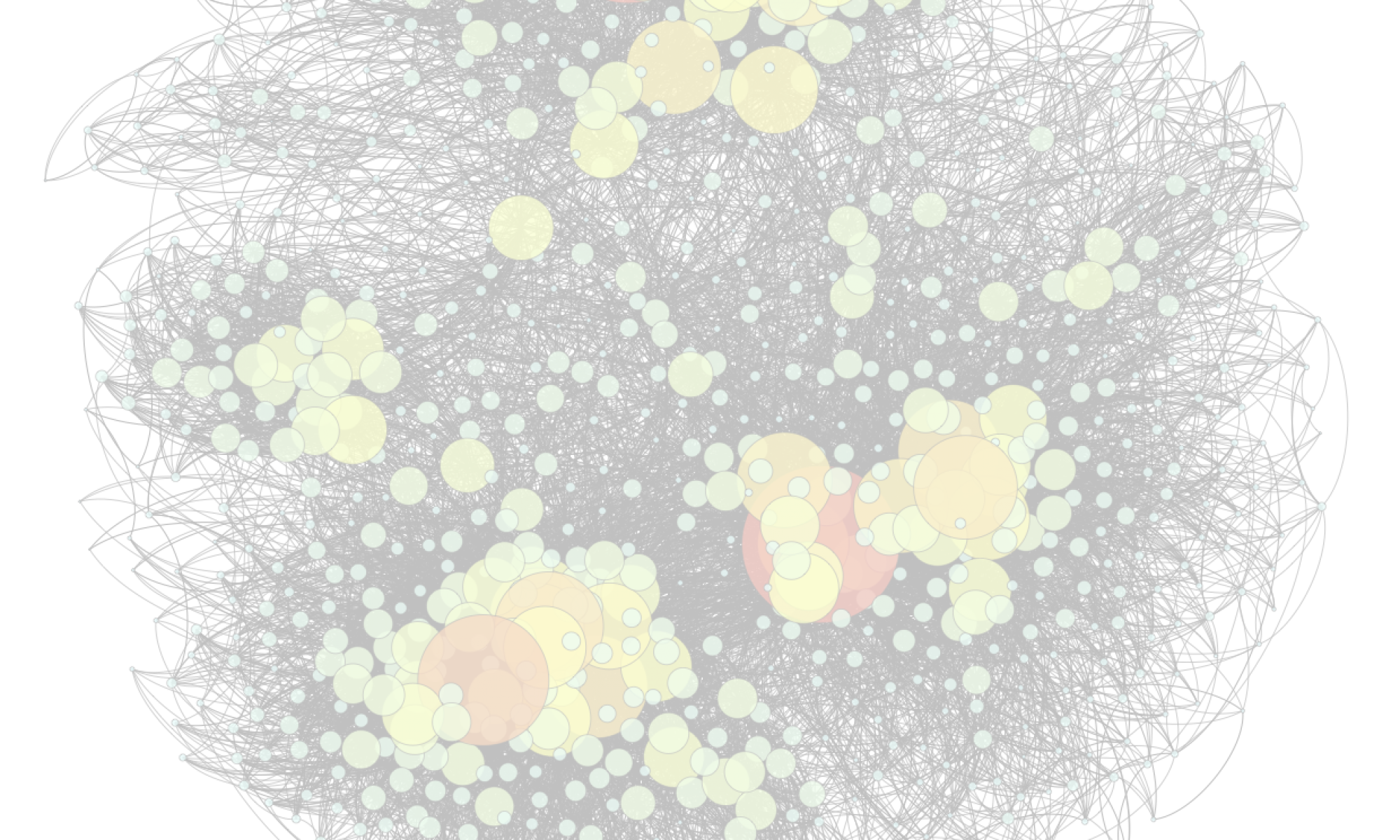

Second, when data are represented as graphs, a very common machine learning technique called “embedding” consists in projecting them onto a vectorial space. This kind of method however is usually not interpretable. We propose a new embedding algorithm called PaRFaITe based on the factorization of the Personalized PageRank matrix. This algorithm is designed to provide interpretable results.

We study both algorithms theoretically and experimentally. We show that FuDyADT is at least comparable to state-of-the-art algorithms in the usual setting, while also being able to handle unusual settings such as deletions of data. PaRFaITe on the other hand produces embedding dimensions that align with the communities of the graph, making the embedding interpretable.